HIGH AVAILABILITY

Since communication now controls so much of our daily lives, demand has grown for reliable, stable, high-performance infrastructure designed to serve critical systems and keep them running. High availability and scalability are now a must. High availability is a quality of infrastructure design at scale that focuses on reducing downtime, eliminating single points of failure, and handling increased system load.
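The "eliminate single points of failure" goal can be made concrete with a back-of-the-envelope calculation. This is a sketch that assumes component failures are independent and uses made-up availability figures. Components chained in series multiply their availabilities, so every extra single point of failure lowers the total. Redundant copies in parallel are down only when all of them are down.

```python
from math import prod
from typing import Iterable


def series_availability(components: Iterable[float]) -> float:
    """Every component in the chain must be up, so availabilities multiply."""
    return prod(components)


def parallel_availability(replica: float, count: int) -> float:
    """The group is down only if all `count` independent replicas are down."""
    return 1 - (1 - replica) ** count


# Illustrative figures only: each server is assumed to be up 99% of the time.
server = 0.99
print(f"single server:         {series_availability([server]):.4f}")
print(f"two redundant servers: {parallel_availability(server, 2):.4f}")
# Load balancer -> pair of app servers -> single database, all in series.
chain = [server, parallel_availability(server, 2), server]
print(f"LB + 2 apps + 1 DB:    {series_availability(chain):.4f}")
```

The last line shows why the remaining single components matter. Even with redundant application servers, the lone load balancer and lone database cap the chain at roughly 98%.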

DATABASE CLUSTERS

KEEP DATABASES CONSISTENT AND AVAILABLE AT ALL TIMES

Active-passive database clusters offer the accessibility of centralized storage. They also provide high availability by failing over to redundant hot copies of your data if the active database fails.
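The failover decision itself can be sketched in a few lines. The `Node` and `ActivePassiveCluster` names below are hypothetical. Real cluster managers also handle health probing, replication and fencing. This sketch shows only the routing rule: use the active node while it is healthy, and otherwise promote the hot standby.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True


class ActivePassiveCluster:
    """One active node serves traffic; a hot standby takes over if it fails."""

    def __init__(self, active: Node, standby: Node) -> None:
        self.active = active
        self.standby = standby

    def current(self) -> Node:
        # Fail over only when the active node is down and the standby is up.
        if not self.active.healthy and self.standby.healthy:
            # Promote the standby; the failed node becomes the new standby.
            self.active, self.standby = self.standby, self.active
        if not self.active.healthy:
            raise RuntimeError("no healthy database node available")
        return self.active


primary = Node("db-1")
replica = Node("db-2")
cluster = ActivePassiveCluster(primary, replica)
print(cluster.current().name)   # db-1
primary.healthy = False
print(cluster.current().name)   # db-2
```

This sketch does not fail back automatically. Once `db-2` is promoted, it stays active even after `db-1` recovers, which avoids flapping between nodes.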


LOAD BALANCING

KEEP APPLICATIONS AVAILABLE AT ALL TIMES

Load balancing distributes traffic across servers. This increases capacity, improves server performance, and provides redundancy through failover if hardware or an application fails.

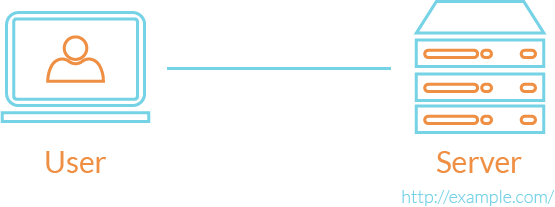

The entire environment resides on a single server. For a typical web application, that would include the web server, application server, and database server. A common variation of this setup is a LAMP stack, which stands for Linux, Apache, MySQL, and PHP, on a single server.

Use Case: Good for setting up an application quickly, as it is the simplest setup possible, but it offers little in the way of scalability and component isolation.

PROS

- Simple

CONS

- Application and database contend for the same server resources (CPU, memory, I/O, etc.). Besides possibly hurting performance, this can make it difficult to tell whether the application or the database is the source of poor performance

- Not readily horizontally scalable

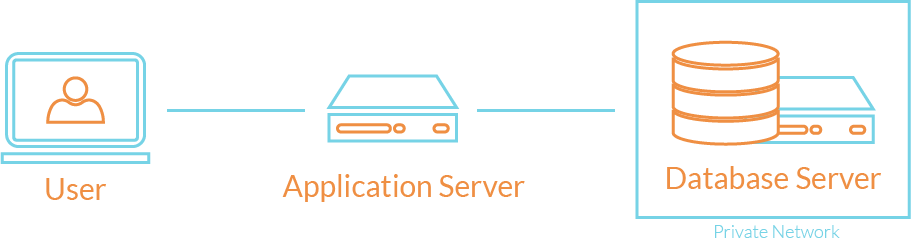

The database management system (DBMS) can be separated from the rest of the environment to eliminate the resource contention between the application and the database, and to increase security by removing the database from the DMZ, or public internet.

Use Case: Good for setting up an application quickly, but keeps application and database from fighting over the same system resources.

PROS

- Application and database tiers do not contend for the same server resources (CPU, Memory, I/O, etc.)

- You may vertically scale each tier separately, by adding more resources to whichever server needs increased capacity

- Depending on your setup, it may increase security by removing your database from the DMZ

CONS

- Without failover, the single application server remains a single point of failure and a potential bottleneck

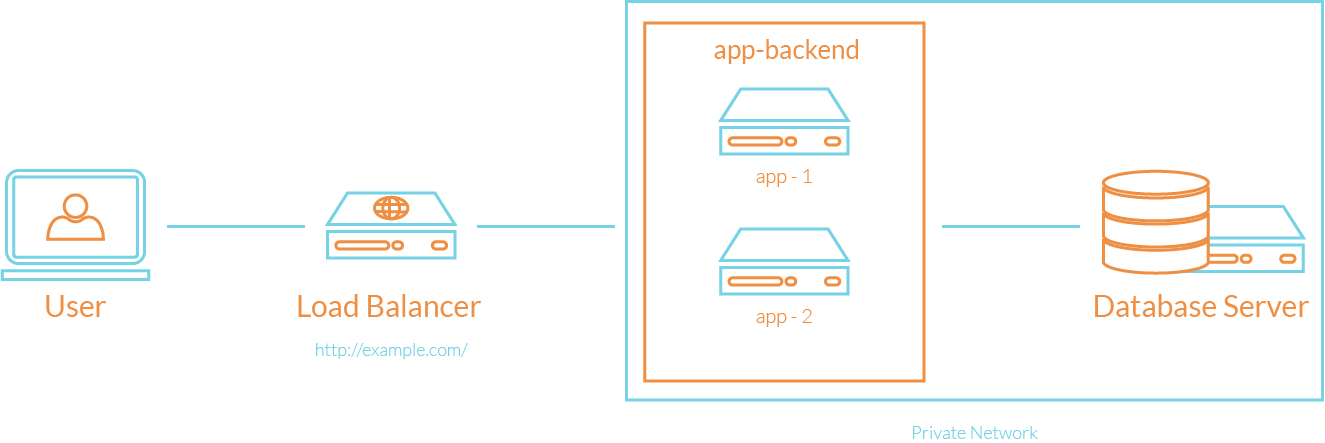

Load balancers can be added to a server environment to improve performance and reliability by distributing the workload across multiple servers. If one of the load-balanced servers fails, the other servers handle the incoming traffic until the failed server becomes healthy again. A load balancer can also serve multiple applications through the same domain and port by acting as a layer 7 (application layer) reverse proxy.
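The failover behaviour described above can be sketched as a round-robin balancer that skips unhealthy backends. This is a minimal Python illustration, not how HAProxy, Nginx or Varnish are implemented. The backend names and the `mark_down`/`mark_up` methods are hypothetical stand-ins for a real health checker.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotate through backends in order, skipping any marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        # A health checker would call this after a server fails its probes.
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        # A health checker would call this once the server passes again.
        self.healthy.add(backend)

    def pick(self) -> str:
        # Look at each backend at most once per request.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.pick() for _ in range(3)])   # ['app-1', 'app-2', 'app-3']
lb.mark_down("app-2")
print([lb.pick() for _ in range(4)])   # ['app-1', 'app-3', 'app-1', 'app-3']
```

Once `mark_up("app-2")` is called, the server rejoins the rotation automatically. This matches the text's "until the failed server becomes healthy again".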

Examples of software capable of reverse proxy load balancing: HAProxy, Nginx, and Varnish.

Use Case: Useful in an environment that requires scaling by adding more servers, also known as horizontal scaling.

PROS

- Enables horizontal scaling, i.e. environment capacity can be scaled by adding more servers to it

- Can help protect against DDoS attacks by limiting client connections to a sensible number and frequency

CONS

- The load balancer is a single point of failure; if it goes down, your whole service can go down. A high availability (HA) setup, by contrast, is an infrastructure without a single point of failure.
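The connection-limiting idea from the PROS list above is often implemented with a token bucket per client. That algorithm is an assumption here, since the text does not name one. In this hypothetical sketch, each client IP may burst up to `capacity` requests and is then limited to `rate` requests per second. The clock is injectable so the behaviour can be shown deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Permit bursts of up to `capacity` requests, refilled at `rate`/second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keep one token bucket per client IP address."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_ip: str) -> bool:
        if client_ip not in self.buckets:
            self.buckets[client_ip] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client_ip].allow()


# A fake clock makes the example deterministic.
fake_time = [0.0]
limiter = PerClientLimiter(rate=1.0, capacity=2, clock=lambda: fake_time[0])
print([limiter.allow("203.0.113.7") for _ in range(3)])  # [True, True, False]
fake_time[0] = 1.0
print(limiter.allow("203.0.113.7"))    # True: one token refilled after 1 second
print(limiter.allow("198.51.100.2"))   # True: another client has its own bucket
```

A production limiter would also expire idle buckets so memory does not grow without bound. In practice you would normally rely on the load balancer's own rate-limiting features rather than application code.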

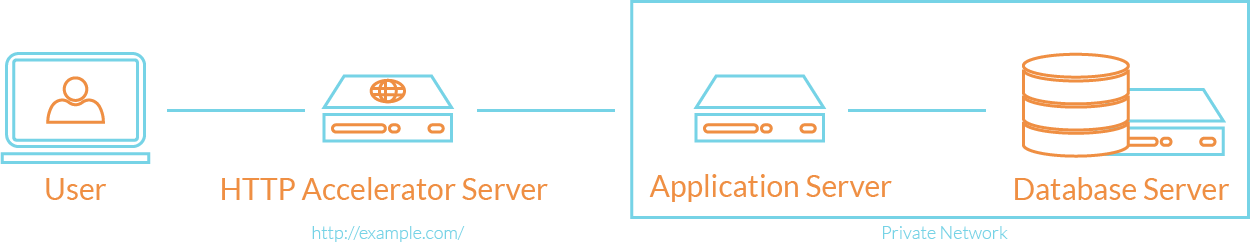

An HTTP accelerator, or caching HTTP reverse proxy, can be used to reduce the time it takes to serve content to a user through a variety of techniques. The main technique employed with an HTTP accelerator is caching responses from a web or application server in memory, so future requests for the same content can be served quickly, with less unnecessary interaction with the web or application servers.
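The core of an HTTP accelerator, serving repeated requests from memory, can be sketched as a time-to-live (TTL) cache in front of a fetch function. `ResponseCache` and the `app_server` stand-in are hypothetical. Real accelerators such as Varnish also honour HTTP caching headers and evict entries under memory pressure. The hit and miss counters expose the cache-hit rate mentioned in the CONS list.

```python
import time
from typing import Callable


class ResponseCache:
    """In-memory cache of response bodies keyed by URL path, with a fixed TTL."""

    def __init__(self, fetch: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch        # called on a miss, standing in for the app server
        self.ttl = ttl
        self.clock = clock
        self.store: dict[str, tuple[float, str]] = {}   # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and now < entry[0]:
            self.hits += 1            # fresh copy: no trip to the app server
            return entry[1]
        self.misses += 1              # absent or expired: fetch and remember it
        body = self.fetch(path)
        self.store[path] = (now + self.ttl, body)
        return body

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


backend_calls: list[str] = []


def app_server(path: str) -> str:
    backend_calls.append(path)
    return f"<content of {path}>"


cache = ResponseCache(app_server, ttl=60)
for _ in range(4):
    cache.get("/images/example.png")
print(len(backend_calls), cache.hit_rate())   # 1 0.75
```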

Examples of software capable of HTTP acceleration: Varnish, Squid, Nginx.

Use Case: Useful in an environment with content-heavy dynamic web applications, or with many commonly accessed files.

PROS

- Increases site performance by reducing CPU load on the web server through caching and compression, thereby increasing user capacity

- Can be used as a reverse proxy load balancer

- Some caching software can protect against DDoS attacks

CONS

- Requires tuning to get the best performance out of it

- If the cache-hit rate is low, it could reduce performance
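A simplified latency model shows why a low cache-hit rate can hurt. Every request pays the cache lookup, and only misses also pay the trip to the application server. This model is an assumption: it ignores the extra load-balancer hops and the cost of storing new entries. Under it, the cache only helps when the hit rate exceeds the ratio of lookup time to backend time. The millisecond figures are illustrative, not measurements.

```python
def avg_latency_ms(hit_rate: float, lookup_ms: float, backend_ms: float) -> float:
    """Every request pays the cache lookup; only misses also pay the backend."""
    return lookup_ms + (1 - hit_rate) * backend_ms


def break_even_hit_rate(lookup_ms: float, backend_ms: float) -> float:
    """Hit rate above which the cache beats going straight to the backend."""
    # lookup + (1 - h) * backend < backend  <=>  h > lookup / backend
    return lookup_ms / backend_ms


# Illustrative figures: 2 ms cache lookup, 50 ms to render from the app server.
LOOKUP, BACKEND = 2.0, 50.0
for h in (0.9, 0.5, 0.02):
    print(f"hit rate {h:.0%}: {avg_latency_ms(h, LOOKUP, BACKEND):.1f} ms "
          f"(no cache: {BACKEND:.1f} ms)")
print(f"break-even hit rate: {break_even_hit_rate(LOOKUP, BACKEND):.0%}")
```

With these figures, the cache averages about 7 ms at a 90% hit rate. At a 2% hit rate it averages about 51 ms, which is slower than having no cache at all.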

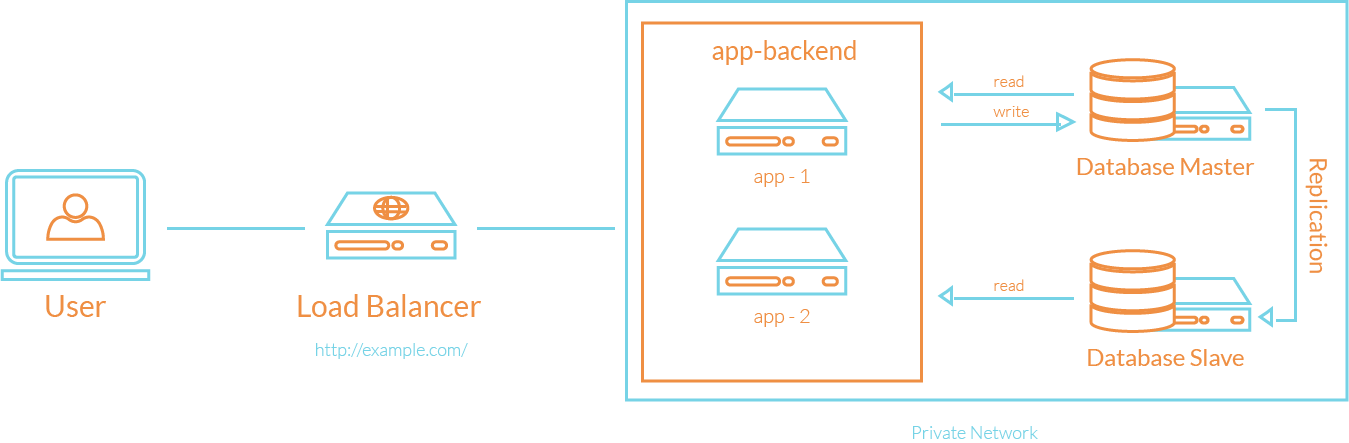

One way to improve performance of a database system that performs many reads compared to writes, such as a CMS, is to use master-slave database replication. Master-slave replication requires a master and one or more slave nodes. In this setup, all updates are sent to the master node and reads can be distributed across all nodes.
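The routing rule described above can be sketched as a small read/write splitter. The hypothetical `ReadWriteRouter` classifies statements by their first keyword. That approach is deliberately naive; for example, it would send `SELECT ... FOR UPDATE` to a slave. Here reads rotate across the slaves only, which reserves the master for updates as in the second PROS item.

```python
from itertools import cycle

# Statements treated as updates; a deliberately simple, assumed list.
WRITE_KEYWORDS = {"INSERT", "UPDATE", "DELETE", "REPLACE", "CREATE", "ALTER", "DROP"}


class ReadWriteRouter:
    """Send updates to the master and rotate reads across the slaves."""

    def __init__(self, master: str, slaves: list[str]) -> None:
        self.master = master
        self._slaves = cycle(slaves) if slaves else None

    def route(self, sql: str) -> str:
        words = sql.split()
        first = words[0].upper() if words else ""
        if first in WRITE_KEYWORDS or self._slaves is None:
            return self.master        # updates (or no slaves available) go to the master
        return next(self._slaves)     # reads are spread across the slaves


router = ReadWriteRouter("db-master", ["db-slave-1", "db-slave-2"])
for statement in ("SELECT * FROM posts",
                  "UPDATE posts SET title = 'Hello' WHERE id = 1",
                  "select id from users",
                  "SELECT 1"):
    print(f"{router.route(statement):<11} {statement}")
```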

Use Case: Good for increasing the read performance for the database tier of an application.

PROS

- Improves database read performance by spreading reads across slaves

- Can improve write performance by using master exclusively for updates (it spends no time serving read requests)

CONS

- The application accessing the database must have a mechanism to determine which database nodes it should send update and read requests to

- Updates to slaves are asynchronous, so there is a chance that their contents could be out of date

- If the master fails, no updates can be performed on the database until the issue is corrected
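The staleness risk in the CONS list arises because the slaves replay the master's updates some time after they were committed. The toy model below is hypothetical and far simpler than real replication, with no binary log, network or crash recovery. It only shows how a read from a lagging slave can return an older value.

```python
class Master:
    """Applies writes immediately and appends them to an ordered update log."""

    def __init__(self) -> None:
        self.data: dict[str, str] = {}
        self.log: list[tuple[str, str]] = []

    def write(self, key: str, value: str) -> None:
        self.data[key] = value
        self.log.append((key, value))


class AsyncSlave:
    """Replays the master's log later, whenever replay() is called."""

    def __init__(self, master: Master) -> None:
        self.master = master
        self.data: dict[str, str] = {}
        self.applied = 0              # number of log entries replayed so far

    def replay(self) -> None:
        for key, value in self.master.log[self.applied:]:
            self.data[key] = value
        self.applied = len(self.master.log)

    def lag(self) -> int:
        return len(self.master.log) - self.applied


master = Master()
slave = AsyncSlave(master)
master.write("post:1", "draft")
slave.replay()
master.write("post:1", "published")         # committed on the master
print(slave.data["post:1"], slave.lag())    # draft 1  (not yet on the slave)
slave.replay()
print(slave.data["post:1"], slave.lag())    # published 0
```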

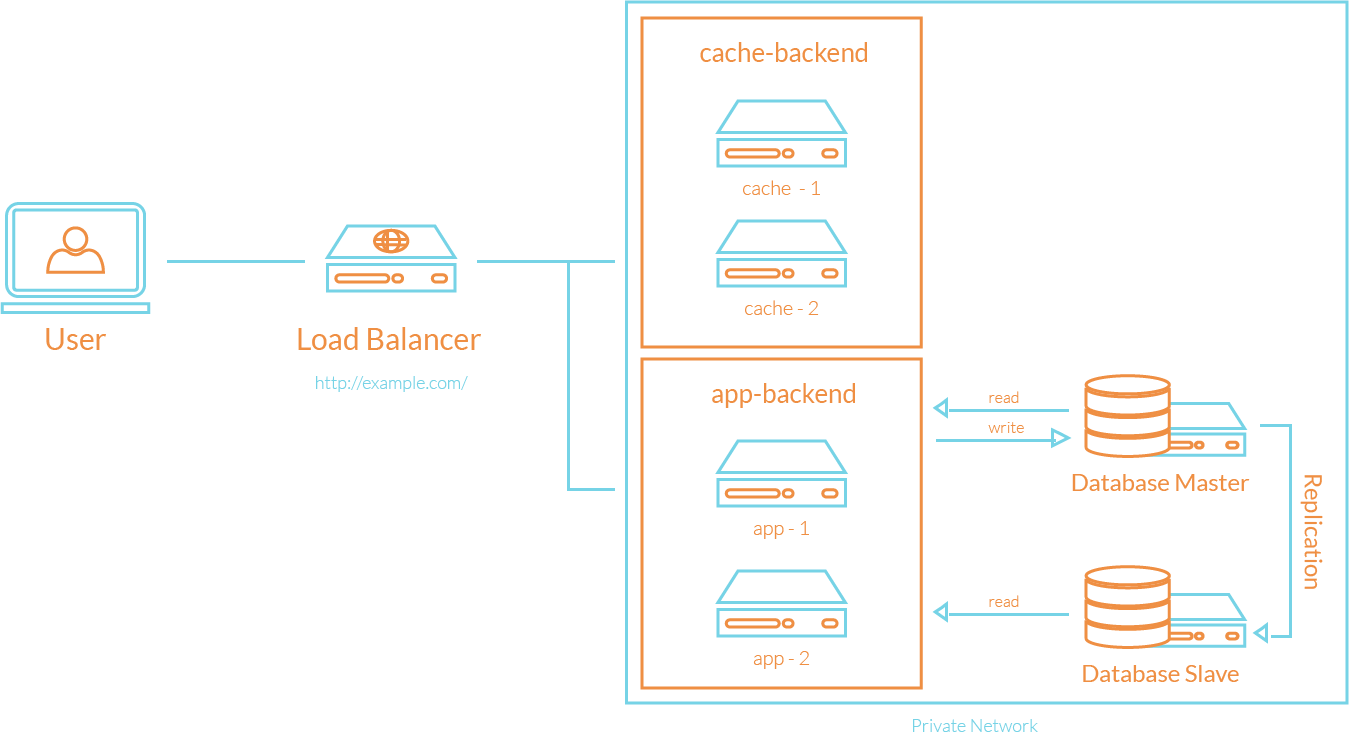

Let’s assume that the load balancer is configured to recognize static requests (like images, CSS, JavaScript, etc.). It sends those requests directly to the caching servers and all other requests to the application servers.
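A load balancer rule like the one assumed above usually matches on the request path. The hypothetical `choose_backend` function below is a Python sketch of that rule. It treats a request as static when its path ends in one of a few assumed file extensions and routes it to `cache-backend`. Everything else goes to `app-backend`.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Assumed set of file extensions served as static content.
STATIC_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".css", ".js"}


def choose_backend(url: str) -> str:
    """Return the backend pool a request should be sent to."""
    path = urlsplit(url).path                  # ignores the query string
    suffix = PurePosixPath(path).suffix.lower()
    return "cache-backend" if suffix in STATIC_EXTENSIONS else "app-backend"


for url in ("http://example.com/",
            "http://example.com/images/example.png",
            "http://example.com/static/site.CSS?v=3",
            "http://example.com/blog/high-availability"):
    print(f"{choose_backend(url):<14} {url}")
```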

Here is what happens when a user requests dynamic content:

1. The user requests dynamic content from http://example.com/ (load balancer)

2. The load balancer sends the request to app-backend

3. app-backend reads from the database and returns the requested content to the load balancer

4. The load balancer returns the requested data to the user

If the user requests static content:

1. The load balancer checks cache-backend to see if the requested content is cached (cache-hit) or not (cache-miss)

2. If cache-hit: return the requested content to the load balancer and jump to Step 7. If cache-miss: the cache server forwards the request to app-backend, through the load balancer

3. The load balancer forwards the request through to app-backend

4. app-backend reads from the database then returns requested content to the load balancer

5. The load balancer forwards the response to cache-backend

6. cache-backend caches the content then returns it to the load balancer

7. The load balancer returns requested data to the user
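Both request flows can be traced with a short simulation. This is a hypothetical sketch. A plain dictionary stands in for cache-backend, and a function stands in for app-backend and its database. Each trace entry corresponds to one hop in the static-content steps above, or in the dynamic-content steps. The request is assumed to have been classified as static or dynamic already.

```python
from typing import Callable


def handle(path: str, is_static: bool, cache: dict[str, str],
           app_backend: Callable[[str], str]) -> tuple[str, list[str]]:
    """Return the response body and the list of hops the request took."""
    trace: list[str] = []
    if not is_static:
        # Dynamic content: the load balancer goes straight to app-backend.
        trace.append("lb -> app-backend")
        body = app_backend(path)          # app-backend reads from the database
        trace.append("app-backend -> lb")
    else:
        trace.append("lb -> cache-backend: lookup")              # step 1
        if path in cache:
            body = cache[path]
            trace.append("cache-backend -> lb: hit")             # step 2, jump to step 7
        else:
            trace.append("cache-backend -> lb: miss, forward")   # step 2
            trace.append("lb -> app-backend")                    # step 3
            body = app_backend(path)
            trace.append("app-backend -> lb")                    # step 4
            trace.append("lb -> cache-backend")                  # step 5
            cache[path] = body
            trace.append("cache-backend -> lb")                  # step 6
    trace.append("lb -> user")   # final step (step 7 for static content)
    return body, trace


def app_backend(path: str) -> str:
    return f"<{path} rendered from the database>"


cache: dict[str, str] = {}
_, miss = handle("/images/example.png", True, cache, app_backend)
_, hit = handle("/images/example.png", True, cache, app_backend)
_, dynamic = handle("/", False, cache, app_backend)
for hop in miss:
    print(hop)
print(len(miss), len(hit), len(dynamic))
```

The second static request takes only three hops because step 2 jumps straight to step 7 on a cache-hit.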

This environment still has two single points of failure (the load balancer and the master database server). It still provides all of the other reliability and performance benefits described in each section above.